An Interview...

Dr. Paula Tallal – Neuroscience, Phonology and Reading: The Oral to Written Language Continuum

Dr. Paula Tallal is Board of Governor's Chair of Neuroscience and Co-Director of the Center for Molecular and Behavioral Neuroscience at Rutgers University and Co-Founder and a Director of Scientific Learning Corp. A world-recognized authority on language-learning disabilities, she is active on many scientific advisory boards and government committees for both developmental language disorders and learning problems.

We found Dr. Tallal to be a brilliant neuroscientist with a deep compassion for the plight of children who suffer from language learning difficulties.

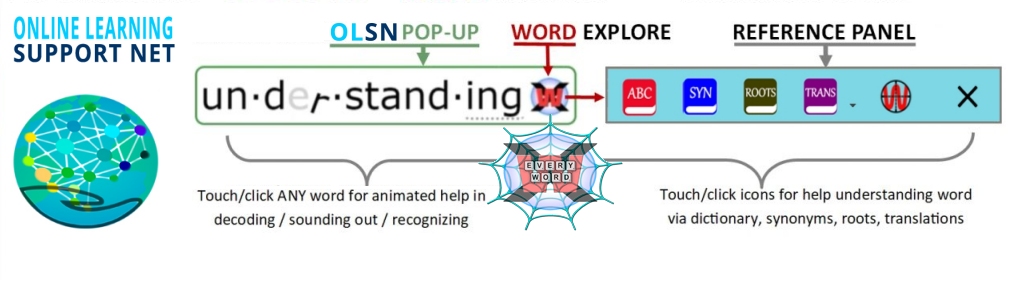

-----------------------------

Personal Background:

David Boulton: Let’s start with a sketch on yourself and how you came to be here.

Dr. Paula Tallal: I actually got interested in literacy by way of my long-term interest in language and the brain. My first experience was working with adult patients who had lost their language as a result of brain damage. I was just absolutely amazed and horrified that you could lose the ability to communicate, to express yourself, or even understand what other people said. So, I’ve been interested since early in my education, from that early experience I had being able to work as a volunteer in an aphasia unit.

When I went to graduate school I became interested in children who were having difficulty developing the ability to talk even though they seemed to be developing quite normally and healthily in all other ways. And at the time there was not that much known about these children, other than that there were lots of ways you could end up with difficulty learning to talk. Of course, if you had a hearing problem or were deaf it would be very difficult to learn oral language. If you had severe mental retardation you might not have the capacity for language. If you had difficulty in moving your mouth or had an oral motor difficulty or a cleft palate or something like that, that could impact your language development.

But there still remained a large group of children, and recent epidemiological research actually suggests it’s as high as almost eight percent of children who, even after you exclude all these other known reasons, nonetheless struggle to learn oral language. And longitudinal research that I and other people have done, following children with early oral language developmental delays, finds that there is a high coincidence of these children ultimately developing difficulty in reading, writing, and particularly spelling.

I became interested in the whole continuum between oral and written language and particularly what we could learn by studying children who are struggling. My particular interest was how the brain does it. There are a lot of different aspects of research that have to do with looking at children who are struggling to learn to talk or learn to read and try to figure out what’s the problem – especially so we can learn to develop more effective remediation, intervention programs. The area that has always interested me most is how the brain develops the representations of the sounds of speech and puts those sounds together to make words and words together to make sentences and ultimately the interactions that we have when we communicate with each other. So, my interest has always been in understanding the neural and biological underpinnings of language development and disorders.

Oral and Written Language:

Dr. Paula Tallal: By language I mean language in the broader sense, both oral and written language, because after all, written language must stand on the shoulders of oral language. It’s not that you can’t learn to read if you don’t have an oral language, but it’s very difficult. Even for deaf individuals who have a completely full blown sign language system, if they don’t have a phonological base to that sign system – the ability to hear what the individual sounds are like inside of words – it’s very difficult to learn to read.

And that’s also another clue as to what reading is all about. When we look at a child learning to read we certainly see the visual side of it. When most people think about reading they think about the visual side of reading because it’s so obvious. There’s the squiggles on the page and that’s what you’re going to have to learn to put together. That’s the code, or at least that’s what people have thought for years was the code. But it turns out that that code has to be decoded in terms of the sounds that are made inside of words because those letters actually have to come to represent not the words, but the sounds inside of words.

Phonological Awareness:

Dr. Paula Tallal: And the real key to learning to read is becoming what is known in the field as phonologically aware. Phonological awareness means knowledge – the awareness that words can actually be broken down into smaller parts and those parts are called phonemes or speech sounds. And the phonemes build words both for oral language and for written language. And it turns out that children who have difficulty with written language as a group, not all of them but the large majority of them, have difficulty in becoming phonologically aware and playing little word games. Being able to know that the word plate without the /p/ is late. Now, people who have coded the whole word who can say the word plate perfectly well – unless they’re phonologically aware that they can get inside the word, they have very great difficulty in knowing that plate without the /p/ would make the word late. Or plate without the /t/ would make the word plae.

So, these were clues that this has nothing to do with the visual aspects of language. It has something to do with the acoustics of language, what the sounds actually sound like inside of words. Now those two things come together – the fact that children who have difficulty with reading on the whole have difficulty with the smaller sounds inside of words, the phonemes. And also the fact that children who have significant difficulty learning to talk also have difficulty with the sounds inside of words, but their difficulty shows up much earlier in life. And also the fact that children who have trouble with oral language generally will go on to have difficulty with written language, even if it’s more subtle difficulty later on with spelling.

The Oral to Written Language Continuum:

Dr. Paula Tallal: Those all come together to form what I consider to be an oral to written language continuum. And I’ve been interested in what the brain has to tell us about how the brain learns phonology, the phonological system, and how that ultimately translates into the development of a language all the way up to the level of grammar interaction between people and also into reading.

Alright, so then how does the brain ultimately go from the little baby lying in the crib and everyone’s saying ‘Oh what a pretty little baby you are. Look at those big blue eyes.’ How do you get from all that sound that the baby is being bathed in to the point that the baby can pull out these individual sounds and know that those are indeed the sounds that they’re going to have to use to build up their own language and their own reading system?

One hypothesis is that you’re just born with that, that it’s innate, that it’s pre-specified that you know it. But that can’t possibly be the case because you don’t know which language you’re going to be born into. You don’t know what people are going to say to you and so it can’t be just that this is innate. There might be structures in the brain that have developed over time that make it more likely that a human being is going to be able to pull this code apart better than a non human. But that doesn’t really answer the question as to what really has to happen.

So, clearly the baby is lying in its crib, it doesn’t know which language it’s going to be exposed to. It may have even already heard some sounds in utero; we have some evidence from research that that’s the case. But nonetheless, the baby’s brain’s job is to basically chop apart the sounds that it’s hearing and to figure out which ones are going to be meaningful as the building blocks or the phonemes for their language.

How the Brain Learns:

Dr. Paula Tallal: That brings us into how the brain learns in general. The brain seems to learn by looking for consistencies, looking for events that repeat themselves frequently. And those events are usually made up of visual input, auditory input, feeling in the mouth for the baby, and feeling on the body the sensory events of the world. And the baby’s brain’s job is to begin to understand and to code neurally in the brain; to map its own brain through experiences to what’s going to matter and what’s not going to matter.

Now to begin with, it’s very well known that in fact babies can discriminate the sounds of all the languages in the world and that would make sense because they don’t know which ones are going to be important. So babies have to be, as my colleague Pat Kuhl says, citizens of the world when they’re first born. But very quickly, within the first six months of life, babies come to only be able to hear the differences between those sounds that are important in their language, their set of phonemes, and they begin to not even be able to discriminate the sounds of other languages that are not used in their own.

In trying to understand how that occurs in the brain there is a big clue to how the brain is actually breaking up this system and beginning to represent the individual sounds as neural firing patterns. What we know from other kinds of research, particularly animal research, is something called Hebbian learning: neurons that fire together in time will wire themselves up. And the more often a set of neurons fire together, the more likely it is that they will fire again together and form an easier and easier representation so it will be easier and easier to get that set of neurons to fire off together and wire up together.

It’s believed at this point that the firing together of information in time will bind that information together and say, ‘okay this is a chunk of information which is occurring on a regular basis statistically in your environment, it must be important – pull that together and make it easier for your brain to respond to it’. And we think that has something to do with the basic units in which the brain is going to perceive the phonological building blocks of language.
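The "fire together, wire together" idea described above can be illustrated with a very small Python sketch. It is only a toy model under stated assumptions, not the interviewee's model or a description of real neurons: the six "units", the learning rate, and the two made-up activity patterns are all hypothetical. Each time a pattern is presented, the connection between every pair of units that are active together is strengthened a little, so a pattern that occurs often ends up with much stronger internal connections than one that occurs rarely.

```python
def hebbian_weights(exposures, n_units, rate=0.1):
    """Toy Hebbian rule: strengthen the link between units that are active together.

    exposures: list of activity patterns, each a list of 0/1 values of length n_units.
    rate: hypothetical learning rate (how much one co-activation strengthens a link).
    """
    weights = [[0.0] * n_units for _ in range(n_units)]
    for pattern in exposures:
        for i in range(n_units):
            for j in range(n_units):
                if i != j:
                    # The weight grows only when both units are active (1 * 1).
                    weights[i][j] += rate * pattern[i] * pattern[j]
    return weights


# Hypothetical patterns: units 0-2 stand for features that often occur together,
# units 3-4 for features that occur together only rarely.
frequent = [1, 1, 1, 0, 0, 0]
rare = [0, 0, 0, 1, 1, 0]
exposures = [frequent] * 9 + [rare] * 1

w = hebbian_weights(exposures, n_units=6)
print("link inside the frequent pattern:", round(w[0][1], 2))
print("link inside the rare pattern:", round(w[3][4], 2))
print("link across the two patterns:", round(w[0][3], 2))
```

In this sketch the frequently heard pattern builds up a connection nine times stronger than the rare one, and units that never fire together stay unconnected, which is the statistical "chunking" the passage describes.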

The Brain and Speech:

Dr. Paula Tallal: So, what does the brain have to do to get to that point? What does it mean to fire together? We know that our nervous system is organized in very detailed maps which you have to learn to relate to the features of the environment. So, for example, in the visual modality we have neurons that just fire to certain hues of color and other ones that fire to different line orientations and other ones that will fire to rapid changes in the environment. The same is true of the auditory modality. And in the auditory modality, for the acoustics that we are hearing in speech, we know that they can be broken down to three main categories: 1) the frequency of the sound – how high or how low it is; 2) the intensity of sound – how loud or how soft it is; 3) the duration of sound – how long or how short it is.

There, of course, are also frequency changes because one of the most fundamental characteristics of speech is that it’s created by moving mouths, moving speech articulators. And that creates very rapid frequency changes that are occurring really quickly in time. And we know that we have specific neurons that pick up particular slopes of frequency change going from low to high or from high to low.

As the brain begins to hear sounds, not just speech, but all the other sounds in the environment, it will begin to map itself in a very organized fashion so that the lowest frequencies are right next to the next lower ones and next to the next. In the end what we have is a tonotopic representation in the brain. That occurs both in the ear and also higher up in the brain.

If we have a speech sound, it’s actually pretty complicated. An individual speech sound, a syllable like ‘bah’ we just move our mouth. We put it in a certain place like a flute and then we go to whatever vowel we want. We can go ‘buh’, ‘boo’, ‘bo’. They’re all the same in that it begins with closure at the lips, /b/. But the vowel is very different; the vowel can be made up of high frequencies or low frequencies. The trajectory of where we closed our lips to get to the vowel is what’s called a formant transition. And basically that is just a frequency sweep. It’s a series of frequency sweeps that then come to represent those particular speech sounds.

The brain’s job is to pull together those features, the neurons that fire together, including the frequency, the intensity, the time, and these frequency sweeps. When they all fire together they’ll wire up together. And when they occur a lot of times – what a pretty baby you are, what big blue eyes – the /b/ is happening a lot and so the theory is at least that those are going to wire up together. So that’s all fine, for most of us that works out great and you say what big blue eyes, daddy has those blue eyes. Well, the /d/ is not the same as the /b/ obviously. ‘This is your dad’, ‘you are bad’ – those are very similar words, they mean completely different things. Bad and dad are completely the same except at the very onset whether you put your mouth together, your lips together at the front or at the hard palate. Bad and dad – acoustically they’re almost identical; the whole word is identical except for the first thirty or forty milliseconds. There are lots of words and lots of speech sounds that are different only in terms of one or two acoustic features. Obviously those acoustic features are really important.

Now the other thing that’s really special about speech is that it just keeps on coming at us. People talk fast. Even if you talk slowly you’re still moving these complicated muscles in your entire speech articulatory system and it’s a highly precise movement that will differentiate what the acoustics are that are coming out. So that when I say ‘what a pretty boy you are’, you’ve got all these sounds coming out together, ‘what a pretty girl you are’.

The brain has to follow all those sounds and chunk them out, all the individual pieces, and then put them all back together. That’s the process that needs to be taken care of.

Trouble Learning to Talk or Read:

Dr. Paula Tallal: How does this have anything to do with children who have trouble learning to talk or learning to read? When I first started doing my research in the early seventies, I was interested in trying to understand what the problems at the linguistic level were for these children. What were the problems that they were having learning the grammar of a language, learning how it all worked together, how to express their thoughts and their feelings? But I was trained as an experimental psychologist, and I knew that the first thing you had to do was to rule out more basic problems. So, the obvious things that I would have to confirm were that the children could hear, that they didn’t have a hearing problem, that they weren’t mentally retarded, that they didn’t have any problem with the neural musculature. All the basic building blocks of language I had to demonstrate first were going to be in place before I could begin to understand what was specifically linguistically the problem for these children.

It also occurred to me that there was more to processing the complex acoustic structure of language, ongoing language, than just hearing at the peripheral level. I was very interested in what happened to the sound when it left the ear and moved through the nervous system into the brain. And what are all the different pieces that must be in place to ultimately get to the point that you would be able to organize these acoustic features, put them into words and words into sentences.

I developed a series of processing tasks for little children who were about six to nine years old just to make sure that they could hear fully. They could hear and not only detect that sounds occurred, but also could organize sounds that were occurring; sounds that were more complex, that were brief and occurring rapidly in succession, made up of a combination of frequencies and amplitudes and all the things that would be necessary to ultimately process and represent a speech sound.

Very much to my surprise it turned out that on the whole children who were struggling to learn to talk who did not have a hearing impairment, who were not mentally retarded, didn’t seem to have anything else wrong with them. They were normal, healthy, happy children who seemed to be developing great. They were however quite different in the way in which they organized basic non-linguistic signals, complex auditory signals in general. The building blocks which one would assume you had to put together to come to extract the phonemes of the language really weren’t in place for these children.

Temporal Processing Deficit:

Dr. Paula Tallal: In particular, they seemed to be having difficulty tracking and integrating brief rapidly successive tones of different frequencies. They were having difficulty tracking frequency changes that were occurring rapidly in succession. And that’s rather critical for language since speech is a series of rapidly successive acoustic changes that have to be tracked, encoded, and represented. We did more studies with children with language problems and found that it seems to be a hallmark of many, not all, but many children who are struggling with both oral and written language that they are slow processors. Their brain just needs more time between events to integrate them and track them.

This became known as the temporal processing deficit. Temporal was only a part of the problem. It’s really a temporal spectral processing problem. That means a difficulty tracking acoustic frequency changes occurring over time. And that problem interestingly turns out to be something we can pick out quite early in life in children.

We recently completed a study in which we looked at normally developing infants who are four to six months of age to begin with, just brought a bunch of little healthy babies into the lab, and we wanted to know whether or not we could determine how much time they needed between little tones of different frequencies just to indicate whether they heard the same two signals or two different signals. So, they would come into the lab and we would train them to look to a little toy that was on their right side if they heard beep, BEEP – a low signal followed by a high signal. They were trained to turn their head and look to the left side of the room if they heard beep, beep – the same two sounds. So look over here if you hear two different ones and over here if you hear two same sounds. And of course they don’t know the concept of same or different. These are just normally developing six month old infants, but they can be fairly easily trained to look to this side for beep, BEEP and over here for beep, beep. And they do that because when they look in the right direction this little toy claps its hands and they’re very excited because they’ve controlled the world and that’s what they’re here to do – learn how to do things. It’s all about learning.

When they’ve learned to do that they get happy and they’ll look in the right direction and you can actually determine very well what that individual baby’s threshold is for how fast can these sounds come together for them to continue to perform at a high level of accuracy. You start off with nice long signals with nice long intervals, beep beep, something like that. And then as the baby continues to correctly respond you very subtly begin to adjust, to decrease the time of that silent gap between the end of the first signal and the beginning of the second signal. So, it might eventually become beep beep and then later beepbeep.

What we want to know is how fast do those two come before you’ve lost it? And there is a point for every one of us when the signals come so fast that you can no longer hear the difference between them. That is called your sensory threshold. So, we wanted to know what that threshold was for each of our little babies. Then we had another group of babies and these were babies that were born into families that already had one or more family members who had either current or a history of oral or written language problems. We’ve done it both ways. We wanted to know if you have a family history of a language learning problem is your ability to track these brief sensory events, the acoustic events, different than a baby who did not?
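The threshold-finding procedure described above, where the silent gap is shortened after correct responses until the listener can no longer tell the tones apart, resembles what is generally called an adaptive up-down staircase. The Python sketch below is a hypothetical illustration only: the interview does not give the lab's actual step sizes, stopping rule, or scoring, so the starting gap, the 20 ms step, the six reversals, and the simulated listeners are all assumptions made for the example.

```python
def estimate_gap_threshold(responds_correctly, start_gap_ms=500.0, step_ms=20.0,
                           reversals_needed=6, max_trials=200):
    """Simple one-up/one-down staircase (illustrative assumption, not the lab's method).

    responds_correctly: function taking the silent gap in ms and returning True/False.
    Returns the mean gap at the points where the listener's performance reversed.
    """
    gap = start_gap_ms
    last_direction = None
    reversal_gaps = []
    for _ in range(max_trials):
        correct = responds_correctly(gap)
        # Correct response: make the task harder (shorter gap). Error: make it easier.
        direction = -1 if correct else 1
        if last_direction is not None and direction != last_direction:
            reversal_gaps.append(gap)
            if len(reversal_gaps) == reversals_needed:
                break
        last_direction = direction
        gap = max(0.0, gap + direction * step_ms)
    if not reversal_gaps:
        # The listener never changed from correct to wrong (or back) within max_trials.
        return gap
    return sum(reversal_gaps) / len(reversal_gaps)


# Two simulated listeners (hypothetical): each responds correctly only when the
# gap between the tones is at least as long as their "true" threshold.
fast_listener = lambda gap_ms: gap_ms >= 70
slow_listener = lambda gap_ms: gap_ms >= 300

fast_estimate = estimate_gap_threshold(fast_listener)
slow_estimate = estimate_gap_threshold(slow_listener)
print("estimated threshold, fast listener (ms):", fast_estimate)
print("estimated threshold, slow listener (ms):", slow_estimate)
```

A real infant's responses are noisy rather than all-or-nothing, so an actual study would need more trials and a more careful rule; the sketch only shows how shrinking the gap step by step can home in on an individual threshold.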

We follow a lot of babies over time and what we do is after we establish a threshold when they’re about six months old we then track these babies and really evaluate these individual children’s language development trajectories. We can see them again when they’re twelve months old and when they’re eighteen months and twenty-four months old and thirty-six months old and just carry on following them up. This work was done primarily by my colleague April Benasich at Rutgers University. What we found was that the auditory integration threshold for an individual infant, regardless of whether or not they have a family history of a language learning problem, is the best predictor of language development all the way up to three years old.

Knowing something as simple as how much time you need to organize the information that you hear is highly predictive of language development. Now those children who have very low thresholds – that means they could process very quickly this sensory information, beepbeep, something really fast – those children turned out to develop language more quickly than the children who seemed otherwise to do the task perfectly normally. They could learn it just fine, but they needed just a little bit more time, beep beep, something like that. And then there were other kids who needed a lot more time like hundreds of milliseconds. They needed something like beep beep. They still were doing the task beautifully, but they just couldn’t hear those differences when they went any more quickly than that. Those children turned out to be, frankly, language impaired. But it turned out there was a whole continuum from those children who learned language very quickly to those children who appeared at least at the age of three months old to be at risk for real language problems. And of course now we’re going to continue following these babies up and see whether or not that also predicts whether or not they have difficulty learning to read.

Experience Dependent Brain Development:

Dr. Paula Tallal: So, these early acoustic processing abilities set our brain up for how we’re going to organize the incoming world. Our brains really are experience-dependent learning machines. We need our environment to stimulate the anatomical and physiological properties of the brains we were born with. Without the environmental input there’s very little that’s going to happen for us as humans and even as animals. So it’s clearly a very significant interaction between nature and nurture. We need our own physical environment to stimulate our brains and for language users, in order to break the code for language (which ultimately is a phonemic code, at least for the English language it’s very much a phonemic code for our written language as well) we need to be able to organize the sounds that we hear very quickly in time because the acoustic changes that tell us which speech sound we have just heard are frequency and amplitude changes that occur quickly in time.

Language Processing Underpinnings:

Dr. Paula Tallal: Where does that leave us? It leaves us with knowing a lot about the underpinnings of how the brain begins to process the sensory world, turn that into the phonological representations and turn those into syllables, words, phrases, and ultimately allow us to develop a written code which is the orthography or letters that go with those sounds. We know that when you have trouble anywhere along that route, you’re going to have difficulty with either oral language and/or written language.

A lot of people out there might be saying now that ‘Well, I have a child who’s struggling a lot learning to read but they learned to talk perfectly.’ Well, they don’t always go together and that’s true. There are many, many children who have difficulty learning to read who didn’t have an overt oral language problem. And what I mean by overt is that there are lots of other routes to the same end. You can learn to talk relatively well without becoming that phonologically aware, without really needing to break the sounds down in your mind. It’s only when you hit reading that you must become aware that words are made up of smaller units. But it doesn’t mean that there really weren’t subtle language problems.

One of the most remarkable scientific studies that has been published in my opinion recently was an epidemiological study funded by the National Institutes of Health that showed that if you just screened the oral language abilities of five year olds who are entering public school before you worry about their reading or anything like that, almost eight percent of them were so delayed in oral language development that they would frankly have been given a clinical diagnosis of having a language impairment. But in fact, very few of these children are tested because we allow a lot of individual differences in language development. When a child mispronounces words, it’s very common up to a certain age for everyone to think it’s kind of cute. For example, the child says ‘visketi’ when they meant spaghetti. Or ‘wabbit’ when they mean rabbit. Those are all normal healthy developmental trends up to a certain point in time. But we allow a lot of individual differences and we don’t really know on the whole very well when it is too late to be saying ‘visketi’ or ‘wabbit’ and when it is too late to not have your nouns and verbs go together properly.

What was interesting about this study was that although almost eight percent of the children would have met the clinical diagnosis of specific language impairment, I think it was seventy-nine percent of those children had never been identified by anyone, their parents, teachers, pediatricians, anyone, as being at risk for a language or reading problem. They had the problem, but you know if you pointed out to a parent or something that the child really is delayed in language you would get responses like ‘Oh, I just thought he was shy’ or ‘No, he just doesn’t really pay attention very well’, or things along those lines.

Receptive Language:

Dr. Paula Tallal: I’ve always thought of oral language problems as being the hidden problem, the unrecognized problem, especially for the receptive language part which is really hard to know. What is receptive language? It’s the ability to understand what people are saying. I mean I’m talking right now and I’m having to assume that because people are shaking their heads and nodding that they’re understanding me, but in fact I really don’t know precisely what you do or don’t understand. So, it’s a silent problem when the child’s having a receptive language or a difficulty understanding or one of these perceptual difficulties. If a child’s having difficulty really hearing the subtle differences between bad and dad, well, over time they learn the context. There are some confusing sentences like, that was bad, that was dad – it could have been either way. But most of the time our context really constrains what we’re hearing.

You can get away with an awful lot without anyone noticing until you try to read or spell, at which point the game is up. You either got it exactly or you didn’t. Of course you can read in context and you can guess words as well, but if you’re really being asked to decode for real then you must make that one on one correspondence, you must break the word apart and then put the sounds with the letters.

One of the most telling tests that is used now for determining a child’s readiness to learn to read or whether or not a child is actually having a reading problem is the ability to pronounce non words. What’s a non word? It’s a series of sounds that could have been a word, but just doesn’t happen to be. You take any word and just change a couple of letters. Let’s take the word like rabbit and I can just change the vowel from an A to an I and it would be ‘ribbit’. Could have been a word, just isn’t a word. I could have changed the B’s to double T’s so it could have been ‘rittit’. Could have been a word, isn’t a word.

Tests for Identifying Reading Problems:

Dr. Paula Tallal: Take a series of non words and just ask a child to pronounce them. Give them a series of words starting with real simple ones, ending up with multi-syllable ones and that’s one of the best tests of determining whether a child is going to have a reading problem or is having a reading problem. A better test still is to ask them to read a series of non words. Now what’s the value of that? If you give children a list of real words to read, many, many children who nonetheless have a significant reading problem can pass that test very well. Just like they can look like they didn’t have an oral language problem, they can pronounce the individual words; they can memorize the word patterns from the visual display. But they haven’t really cracked the code. They haven’t really learned that each of the letters has a sound that goes with it and those sounds can change in different contexts.

So, that is one of the better signs of a reading problem, either pronunciation of non words or the reading of non words. And it also helps us to once more understand that it is a continuum between the development of sound systems in the brain into the development of phonological representations into the development of oral language and all the way through written language and spelling. I didn’t say anything about treatment, but we could get into that too.

Timing Critical Processing:

David Boulton: Excellent. One of the most interesting parts in all of this is that you’re describing the infrastructure of processing that’s working underneath human language and how that in different modalities it’s more or less timing critical. So in the case of reading, as an example, there has to be an assembly going on inside the brain and the time it’s taking to put this assembly together is critical to whether the whole process works. It’s quite different than listening to somebody even though underneath both listening and reading many of the same processes are involved.

Dr. Paula Tallal: Right. A lot of people who might have difficulty with this hypothesis or theory would say ‘Well, how does this really work for reading because after all, the word is on the page as long as you want to look at it – it’s static.’ They don’t really get that what we’re really talking about is what the brain has to go through. We really have trouble if you have a timing difficulty in the brain. If your brain is processing information more slowly, then you’re really going to have trouble with on-going language because it just doesn’t wait for you.

Ambiguity in Speech Vs. Code Discrimination:

David Boulton: But in listening to spoken language once you’ve made the discrimination there isn’t any ambiguity.

Dr. Paula Tallal: Right. Exactly.

David Boulton: Whereas with the code there’s lots of ambiguity to overcome in order to assemble the letter-sounds into an inner simulation of language.

Dr. Paula Tallal: Right. Exactly. Even if the word is on the page all day long, the neural processes that your brain has to go through are still the same. You have to extract out the sounds from inside the words and learn to put letters to them. And if you have represented those sounds in a fuzzy way, if your brain doesn’t really clearly hear these very rapid acoustic onsets that differentiate various speech sounds from each other, then you have a much broader pattern of neurons firing together. And what we really need is nice, neat, concise, effective and efficient – wham! That’s that sound and it’s going to fire and it’s going to fire the same pattern every time or a very similar pattern every time and so then it becomes something that your brain doesn’t have to wait to happen.

For example, acoustically, ‘buh’, ‘duh’, and ‘guh’ are almost all exactly the same except for just tens of milliseconds difference at the onset. And if your brain has kind of lumped together or has fuzzy edges between these different boundaries, it’s going to be a lot more difficult to recognize/hear the differences. The teacher is saying this is the ‘buh’, this is the letter B, it goes with the ‘buh’ sound and your brain sometimes is firing off and hearing ‘buh’ and sometimes it’s firing off and hearing ‘duh’. It’s not surprising that you’re going to end up confusing the ‘buh’ and ‘duh’ and ‘guh’ sounds. They are visually confusable, but they are also the most acoustically confusable sounds that we have.

So, there certainly is that whole process going on inside the brain that’s not intuitive. And the problem is that it’s also not intuitive for many teachers. We learn to talk and we even learn to read many times without becoming very aware of how we’re doing it. Very few of us have any idea how our brain is actually processing the speech sounds. It happened so early in our life and is so obvious to us that it’s almost like you cannot fail to process them. And the same thing is true of reading.

Teaching Reading:

Dr. Paula Tallal: When you try to reverse engineer the whole thing in terms of what’s the most effective and efficient way to teach it, that’s where we really get into these reading wars. Different people have completely different ideas of which ways are going to be the most effective and efficient way to teach it. Some people say give them a rich environment around which to learn to read, give the child a lot of very compelling books and stories with a lot of language environment and the child will intuit how to pull out the reading code because it will be so interesting. That’s the whole language approach – to give the child a rich language environment full of good literature.

Then there’s the opposite point of view: no, no, no, you’ve got to teach children very mechanically the letter-sound correspondence and you’ve got to mechanically teach the child the phonics of the language in order for the child to become a proficient reader. For the child who can’t hear those acoustic signals very well, who has very poor phonological representations or fuzzy phonological representations, they are probably going to need that very, very explicit training, but it’s also going to be very difficult for them without the context. Of course they really need both.

The child who really has great phonological categories all represented in their brain and also has a good oral language basis and good communicative skills comes to reading and it probably isn’t going to make any difference at all which way you want to teach those children, they’re going to learn to read because they’ve got such a great neurological and environmental base on which to build the written system. So for those children, it doesn’t matter very much which method; they can be immersed in the reading method, both with phonics and the whole language, and they’ll do great.

It’s the kids who are struggling where one needs to understand more individually what is going on for that child and then to try to individually adapt the training that’s best for them based on both their strengths and their weaknesses. Now some people then go and say ‘Well, you really have to teach to a child’s strengths.’ and other people say, ‘No, no, no, you have to teach to their weaknesses.’ And of course you have to come up with ways of doing both.

What we really need to do is understand the underlying problems that these individual children may be having. It’s my belief that through the use of technology, which can be much more individually adaptive to the child’s learning style, learning pace, underlying learning strengths and weaknesses, we can build an individualized program for them to help them learn through all the multiple routes they might need to become a more effective reader. I really do believe that all children can learn to read, but most children need their own pace and their own particular route through the brain that they’ve developed through their own environment to get there.

ESL:

Dr. Paula Tallal: If English is not their primary language, but it is the language they’re going to be asked to learn to read in, that creates a whole other layer in which we’re going to want to strengthen the oral language skills both at the phonological level, as well as at the grammatical and comprehension level in order to give a good solid base to learn to read and that’s a whole other issue.

David Boulton: Yes, it sure is. Without sufficient oral language proficiency in English, teaching people to read it is a real challenge.

Dr. Paula Tallal: Yes, but you know we have public schools that are not in the business of teaching people how to talk. They’re in the business of teaching people how to read. One of our great social challenges is that there are many more children who are coming to school who really do need a direct approach to improving their oral language abilities and their communication abilities in order for them to become proficient readers. And we just don’t have that in most cases. We go right into reading. We do not sufficiently consider that many children have oral language weaknesses, because either the language they’re learning at school is not their native language or because they’re one of these many children who, for unknown reasons or for many different reasons, are just weak at oral language skills. Children with oral language weaknesses have brains that are set up in such a way that is not as effective in the oral language domain. Those children are going to need more explicit help. Unfortunately they’re generally not getting it. They get to school and the first time anyone notices there is a problem is generally when they start to struggle with reading and therefore everyone just immediately assumes the problem is reading and they go right into reading remediation.

Very few children are ever seen for a formal oral language evaluation, which in my opinion is a real problem. I think if a child is struggling with reading, that in addition to their reading evaluation they need an oral language evaluation so that we know where along this whole continuum the child is. And that’s where one needs to intercede to begin with, to move along.

Building Blocks of Reading:

David Boulton: So, one of the critical building blocks is the ability to recognize differences in sound, in time. In the technology world we would call it the ‘analog to digital converter’ where the sound stream is sliced into digital chunks. If the child’s brain is not doing it fast enough then they won’t have the granularity of distinction necessary to build reading upon.

Then once they have that, there’s a minimum frequency rate or speed of processing that is critical to assembling the virtually heard or actually spoken stream. Any one of these things can cause a bog in processing which causes the stutter that breaks down reading.

Dr. Paula Tallal: Absolutely. Right. I hope we can put your words in there, that sounded perfect. That was very good. You certainly got it. You certainly have the hypothesis.

The Code:

David Boulton: Yet it seems that apart from the phonemic awareness distinction and the processing frequency issues that we have been talking about relative to oral language processing, that when we talk about reading, we’re talking about something else which is how all that works in relation to this code.

Dr. Paula Tallal: That’s right.

David Boulton: This code is man-made technology and a lot of our thinking, in both the whole language and phonics systems of thought, is compensation for the confusing relationships between letters and sounds in the code.

Dr. Paula Tallal: Yes.

Ambiguity Processing Takes Time:

David Boulton: One of the things I’d like to draw out in our conversation now is the difference, as you would perceive it as a neuroscientist who’s watching the timing, between the brain processing challenge of reading a phonetic code and the challenges of reading a code that’s not phonetic and that has the degree of letter-sound ambiguity/variability that the English language does. Back to the timing precarious aspect, it seems to me that a phonetic code is much more straightforward from a processing point of view than a non-phonetic code, particularly in terms of the timing critical brain processing necessary to work out the ambiguity in sufficient time to sustain the stream of reading flow. Can you speak to that?

Dr. Paula Tallal: Okay. What’s interesting is that different languages have more or less transparent orthography. Orthography is the letter system. Now it’s interesting that in some languages there’s really a one-to-one relationship between the letter and the sound it makes. Spanish is one of those languages. There’s a letter, there’s a sound. And once you’ve learned those letters and those sounds you can read just about any words that you want in Spanish, probably any words you want in Spanish correctly. Even if you don’t understand any of them you can at least pronounce them correctly. English is on the other end of the spectrum, it seems, with lots and lots of exceptions. I mean, who came up with E-N-O-U-G-H spells enough? It should be E-N-U-F. So, English has many, many exceptions and that of course adds additional complexities.

What I’ve described in terms of this road block, as it were, in the use of the acoustic information which is coming into the brain very quickly in time and having to have that separated out and come up with nice, neat phoneme categories so that you can have those neurons that are firing together wiring together and getting really solidly wired – that’s going to work really well if you’ve got that all wired up for a system like Spanish. And it’s going to work well for English. But it’s not going to work perfectly for English because there’s a whole lot of additional stuff that you have to pull in. So, English is going to be a much more difficult language and therefore we are probably going to have a lot more children struggling to learn it because there are additional brain processes.

The reality is that if you look at these newer technologies that are able to image the brain in real time – not just take a picture of what lit up and subtract it out from everything else, but really follow using full head evoked potential or magnetoencephalography or something that can actually follow the response – in general, a lot of the brain is working to process information as complex as speech. And when you get into reading where you’ve got the language plus the written part to be decoded and reassembled and comprehended, we are really using a lot of parts of our brain.

But the truth is that reading is one of the more complicated of the higher cognitive functions using attention and rate-of-processing and sequencing and memory and the linguistic systems and the visual system and it’s having to coordinate this dance that’s going on. And the more complicated the translation from the orthography to the phonology is to a particular language, the more complicated this processing dance has to be within the brain. I think the search for a single cause, for example, or single basis for reading in the brain, a single spot that’s going to be activated for reading or a single cause why a child may have difficulty learning to read is both fruitless and not likely to ultimately be correct because it’s too much of a dance and it requires too many moving parts.

Reading Illuminates How the Brain Works:

Dr. Paula Tallal: That’s one of the reasons why it’s one of the most fascinating areas of neuroscience. It’s sort of like the last frontier, as it were, other than consciousness. It’s really high level processing that involves many aspects and if we can ultimately break it down we will understand a great deal about how the brain works. That’s one of the reasons there’s so much interest in addition to the human suffering that goes on for those individuals who are struggling with language and reading.

Why is there so much interest in literacy? I mean language really does take us everywhere. If we think about what makes us human and makes us able to function differently, ultimately it is language; first of all language and of course subsequently written language. From the time we’re born our interaction with our parents, our interaction with peers, our interactions with our sense of self are very wrapped up with the language system.

Difficulties in Reading Profoundly Undermine Self-Esteem:

Dr. Paula Tallal: It’s hard to separate language from the development of self-esteem, self-worth and struggling at school. Although I’ve heard many parents say you know, ‘It’s such a frustrating problem: dyslexia or language learning problems because everyone looks at your child and thinks they’re fine. If they just worked a little harder they would be just fine.’ And yet you can see a child literally who started off being fine begin to wither on the vine as they begin to struggle at school and lose so much of their self esteem in the process.

So, this is a major problem. Not only is it a major problem for the long term economic development of the country – which of course is why I think there’s such a huge focus on literacy – but I think it’s also a huge problem for society to recognize the tremendous toll failure in school, which usually manifests itself earliest as failure learning to read, has on the development and the maintenance of self-esteem, on the sense of self of the individuals who have had difficulty learning to talk or learning to read or learning to communicate. Even when they have become successful adults, even doing very, very well, many times if you ask them about their earlier experiences you can still see the pain of that failure – the sense of failure has never left them.

The Beauty in Reading Science:

Dr. Paula Tallal: This is a problem that is so multifaceted and so important and so hopeful because there’s so much now that we have learned. And through that we have been able to develop remarkable improvements in intervention. I believe one of the reasons for studying this particular problem is that this is a problem we will be able to solve, that we are solving. And that’s very, very hopeful. I see this as not only important to understanding how the brain works at the very highest levels, but also as something that we, as scientists, can do to help improve the outlook for individuals who have these problems.

I think the ultimate goal for all of us is to really understand this development early in the lives of children. Then, knowing what we now know about brain plasticity and about the use of computer technologies for training programs, there’s every reason to believe that we can nip this in the bud before the child ever has to experience the loss of self-esteem through difficulty in school. That would be very exciting. What else would we want?

The Deeper Importance of Reading:

David Boulton: That’s certainly part of what’s motivating us. We understand that at the same time and perhaps more important than the child’s learning to read for its academic enablement, for its future economic potential and so on, is that this learning to read environment is a learning environment in which they’re learning…

Dr. Paula Tallal: About themselves.

David Boulton: About themselves and how their mind functions and how they feel about how their mind functions. And even before the imagery of self-esteem forms as a self-concept feeding back to them, there’s the affect of shame starting to mix into the cognitive process.

Dr. Paula Tallal: Right.

David Boulton: There’s the kind of micro-quantum side of the emotional spectrum in the affective mechanics that are concurring with the cognitive processes.

Dr. Paula Tallal: Right.

David Boulton: And then there’s this downstream self talk story piece.

Dr. Paula Tallal: Yes.

Affect Psychology:

David Boulton: Has your work gotten into the affect psychology side?

Dr. Paula Tallal: Well, I don’t know if you know, but I’m a clinical psychologist as well.

David Boulton: I heard you mention that, so it’s a question I have been waiting to explore.

Dr. Paula Tallal: Yes, so I have in the past done clinical therapy with families with children with language learning problems, as well as other problems. That whole issue of beginning to see the child as the problem, the reading problem, rather than seeing the child as a person and how our society perpetrates that over time and what you can do about that piece of it.

The neuroscience of emotion is just beginning to come together with an understanding of the interplay between the emotions and the cognitive side and probably will tie together in the end through reinforcement systems and through learning systems in the brain.

David Boulton: Are you familiar with Silvan Tomkins’ and Donald Nathanson’s work? Affect, imagery consciousness, the relationship between affect, a series of precursors of emotion, and their relationship to cognition – how both are involved in everything that’s going on.

Dr. Paula Tallal: Yes.

David Boulton: I’m really interested in getting at how children develop emotional self-assumptions in the field of learning to read.

Neuroplasticity:

Dr. Paula Tallal: I don’t know if I’m the best person to focus on that because I haven’t done that much research there. I know that my colleague Michael Merzenich is doing some work with rats now on neuroplasticity and learning. The reason the company I co-founded is called Scientific Learning Corporation is that Mike’s work developed a whole series of scientific learning principles based on the work that he’s done primarily with animals about what it takes to remap the brain. He’s Mr. Neuroplasticity.

I think that learning is one of the most elegant areas to study in neuroscience because it’s one of the few areas that does have the potential to go from the cellular-neurological level all the way up to the human condition. We haven’t completely been able to do that in terms of bridging the gaps. And there are some bridges that may be too big to jump in our lifetime. But I think the area of learning in particular, through the focus on neuroplasticity, which is how do you change the learning in the brain over time and what are differences between critical periods in which certain types of learning just seem to occur more overtly and what has to be done later if you’re going to try to intervene in a process which doesn’t seem to be as adaptive. So, there may be different approaches that have to be taken to get into the neurobiological part of the system in terms of remapping and rewiring, and of course you need technology probably for that as much as anything.

The Neuroanatomy of Reading:

Dr. Paula Tallal: The medial geniculate nucleus, which is involved in the auditory way station in this cortical loop down in the subcortical area, is a cortical/subcortical feedback system and there are distinct areas or nuclei for the different sensory systems. What’s interesting is within these different nuclei there are two basic types of cells. There’s one set of cells which are called the magno cells and they’re called magno because they’re large cells. And then there’s the parvo cellular system and those are smaller, more compact cells. The magno cellular system is larger because they’re more myelinated. And so, one of the theories is that the magno cellular system is involved in the transient transition of information, the rapid transmission of information, as well as a variety of other features.

Al Galaburda at Harvard has done the only neuropathological studies of the brain at the anatomical level, the cellular level, and on the brains of humans that had been known to be dyslexic before they died and then donated their brains for science and they were really able to study them. Al Galaburda’s work has shown that there’s a disproportionate abnormality in the magno cellular systems in both the LGN, the lateral geniculate nucleus of the thalamus, and the MGN, the medial geniculate nucleus. Therefore that translates back into a neuroanatomical, physiological basis for a difference in the processing rates that those brains might have been able to sustain. Al Galaburda is a really good person potentially to talk with because he’s developed a whole series of animal models to look at what are the effects of having deficits in the magno cellular system and also what are the connections between the magno cellular regions of the thalamus up into the cortex.

John Stein at Oxford has also really developed this magno cellular deficit hypothesis which is one of the focuses right now in dyslexia research at the more physiological level. And it fits very, very well with the temporal spectral processing deficit. In fact, many of the current theories have in common that there seems to be some underlying difference in the processing rates of the individuals with difficulty in processing rapid transient information. Joe Talcott and Caroline Witton and various other people who are in John Stein’s lab at Oxford have published some wonderful papers in the Proceedings of the National Academy of Sciences recently, I think in 2000, that really fit well with the study I talked about with infants. Basically, it showed that the ability to process transient visual and/or auditory information is very highly correlated across the spectrum of individual differences and different levels of reading ability.

Core Processing Frequency:

Dr. Paula Tallal: The visual transient processing seems to be highly correlated with orthographic abilities and the auditory transient processing abilities seem to be highly correlated with the phonological decoding abilities of children. And this is across the spectrum.

David Boulton: And implicate in both is the core processing frequency and the ability to make distinctions.

Dr. Paula Tallal: Yes, exactly. That’s what it’s all about. It implicates core processing frequency and the ability to make distinctions both in the auditory and the visual modality. And then how those come together. So, they’re highly correlated with each other.

What was really interesting in the Talcott et al paper was what they showed about the ability to process transients in non-linguistic stimuli, tones and the rate of modulation, frequency modulation within tones, and what the threshold is for hearing a wavy tone versus a straight tone. Because when it goes whooooo and when it has whoo too fast, all of a sudden you start hearing a solid one.

David Boulton: Right, it clips off.

Dr. Paula Tallal: For normally developing children the individual child’s threshold on transient processing was as highly correlated with their phonological awareness and phonological decoding abilities as their reading and spelling were correlated with each other. It’s really very high levels of correlation.

Exercises To Speed Up Processing Rates:

David Boulton: So we may see at some point new kinds of exercise toys for children that just draw out exercising the frequency of distinction in a more implicate and general way to the various senses that are engaged in their play.

Dr. Paula Tallal: Right. As these processing rates seem to be so fundamental and so highly correlated, both predictive correlations from infancy to childhood and also concurrent correlations throughout life with your phonological abilities, your language abilities, your reading abilities, then the question arises: Is it possible to drive these rates of processing to make finer and finer distinctions in the brain? This became one of the underlying goals of the Fast ForWord training programs.

We use computers to basically do those experiments. We have found that in fact it’s amazing that one is able to drive up processing rates quite substantially across the spectrum. You can make just about anyone a faster processor, and it can be very important for the individuals who might be struggling with language and literacy skills because their processing rate may have been slower at a certain point early in their life when it was important. And you can drive them to faster rates of processing and then look to see whether or not language and reading skills subsequently improve and voilà, they do.

David Boulton: It would seem to me there would be two fields where this would be applicable. One would be having a faster frequency so as to be able to make the distinctions fast enough to stay with the spoken language variations going on in the world.

Dr. Paula Tallal: Right, right.

David Boulton: And the second, to have a higher frequency with which to go through the disambiguation that’s involved in assembling the reading stream because on the one hand, we’ve got these distinct phonemic elements which are required…

Dr. Paula Tallal: Right.

David Boulton: On the other hand, before we can recognize and comprehend the words we read our brains have to assemble them.

Dr. Paula Tallal: That’s right, back together.

David Boulton: Which if there’s ambiguity in the distinctions that have to be processed through in the assembly…

Dr. Paula Tallal: Right. Exactly.

David Boulton: So the more that there’s ambiguity in-between the letters and sounds, the more there’s brain time consumed in working it out.

Dr. Paula Tallal: Right.

David Boulton: So our approach is to increase the speed of the processing and wherever possible decrease the unnecessary ambiguity that is overwhelming the process.

Dr. Paula Tallal: That’s exactly right. And that is exactly coming at the problem from both ends that we’ve used in the development of Fast ForWord. Exactly. You got it. That’s exactly what we tried to do: both increase the processing capacity and also decrease the ambiguity in the signal itself by acoustically altering it so that these rapidly occurring frequency changes over time are slowed down and amplified. Then, as the individual progresses in the various levels of language and language to reading and reading, one can begin to drop out these additional cues, while at the same time having literally rewired the processing constraints the kid had to begin with.

How do we know that we really have literally rewired it? Because we now have the advantage of non-invasive imaging technology in humans, both through evoked potential electrophysiological methods and also through functional neuro-imaging methods. Both imaging methods have been able to show that, indeed, not only are the children behaviorally processing more quickly, their language and reading scores on standardized tests are improving. And the differences between their brains and the brains of children who are better readers are becoming less distinct over time. So those areas that are more involved in certain aspects of the reading task, particularly the phonological aspects, which are distinctly different between a group of children who are struggling with reading and a group of children who are reading really well are diminishing.

Over time, if your methodologies of intervention are working, one should be able to determine that using not only behavioral methodologies, but also physiological and neuro-imaging ones. That’s a real advance. This is brand new data. For the first time we’re beginning to see studies in which one is able to actually evaluate the effectiveness of reading interventions or other types of interventions. Very similar kinds of approaches are being used with patients with stroke, both for the motor systems, the language systems, and the perceptual system and sensory systems. Particularly, we’ll see more and more studies coming out in which these neuro-imaging procedures are being used as assessments for the efficacy; first of all assessments for a deficit, and then used as an additional way of evaluating efficacy of one method over another. It’s a new world.

David Boulton: What they seem to be having more and more in common is the processing speed at the center. And that as you improve that through the exercise in any one domain or venue there seems to be a general transfer because processing speed is implicate in everything.

Dr. Paula Tallal: Right. I believe that processing speed is implicated in everything but I wouldn’t say that all of my fellow scientific colleagues agree with that. I often say which would you rather have, a slow kid or a quick kid? Very few people will choose the slow kid.

David Boulton: That depends on their kid that day.

Dr. Paula Tallal: Right. It’s a colloquial way of putting it, but basically to have a more rapid, more efficient brain is one that should translate into being able to process the kind of information that is involved in language and reading systems more effectively.

Evolution Did Not Wire Us to Read:

David Boulton: When you say language and reading systems… clearly they’re part of the same continuum. But human beings have been using language as we understand it, genetically and from fossil records in terms of the structure of the face and the ability to articulate and so forth, for 100,000 to 200,000 years. Reading, however, as we do it with an alphabet is only 3,500 years old. Reading is a technological contrivance, an artifact that sits on top of language.

Dr. Paula Tallal: Right.

David Boulton: And only a small percentage of the population, up until very recently, was ever concerned with reading or writing.

Dr. Paula Tallal: Right.

David Boulton: So there is no evolutionary adaptive process.

Dr. Paula Tallal: No. That’s a very important point. Although I talk a lot about the language to reading continuum, it doesn’t mean that I assume that through evolution reading will eventually become something built-in like language. You don’t have to explicitly teach language. That does not mean that language is not learned.

There’s a lot of confusion, I believe, even in the scientific literature about that. Because we have more innate processes that facilitate the learning of language does not mean that it doesn’t still have to be learned in the brain, because we know that a deaf child does not automatically learn oral language. There are certain features of communication that occur within signs that will occur even without any kind of formal training. There’s a motivation to communicate that’s going to occur, but language does have to be learned in the brain.

There’s no question that reading is a much newer and contrived system that was developed explicitly to be able to transmit the code of language in a more simplistic way and that has to be explicitly taught. Now, that doesn’t mean that some children don’t intuit it. You know, you can leave some children alone with lots of written information and together with feedback, many children will break the code by themselves. So, it doesn’t mean that we don’t have the capacity to do so. But reading is much more of a learned and explicitly taught system and most children need to be explicitly taught how to break that code.

David Boulton: With respect to the percentage that can take to reading without much intervention from the outside, about twenty percent at best, would you say that there’s a strong correlation with their frequency of processing?

Dr. Paula Tallal: I don’t know.

David Boulton: Have there been any studies to try to correlate those two?

Dr. Paula Tallal: No, there haven’t been.

David Boulton: I think that would be really interesting.

Dr. Paula Tallal: That would be an interesting thing. But you know there’s too much intervention already. I think that the exposure to print is probably going to be a very important variable that won’t be able to be controlled in the kind of study you’re talking about. The sheer exposure to print – and that’s why there’s so much emphasis on reading your child stories. I would have equal emphasis on nursery rhymes, telling and repeating and playing around with nursery rhymes because they rhyme. Rhyming is a very important aspect of learning how to break the code because it teaches you to listen to the sounds that words make and that is a big part of the code.

Reinforcing Learning:

Dr. Paula Tallal: There’s a reason why children like to hear the same book over and over and over again and that is because consistency is important for the brain when you’re a little kid. What you’re looking for is the consistency, not so much the message. A lot of parents go, ‘I can’t believe he wants that book again every night’. When you’re reading you might be into the story that the book is telling. The child is at a point in their life probably where they’re into the sound. And they’re looking for that consistency in the sound and that’s why nursery rhymes are so wonderful and the books that rhyme, the Dr. Seuss books and all those that rhyme, are very, very appealing to the young children because it is reinforcing to the brain.

What reinforces a brain? I believe the ability to process reinforces the brain. If your brain is processing and getting reinforced for that processing it brings a sense of well being; it brings a sense of being in control of your brain. I believe that one of the real issues for children with attention deficit in many cases is that their brain is actually struggling to process the information that it’s getting. When your brain is struggling and can’t get a sense of consistency it’s very disconcerting.

What Happens When You Can’t Trust Your Brain:

Dr. Paula Tallal: I always suggest to parents to think about sitting in a classroom where everybody is speaking Chinese and they don’t know it. Or maybe they know a little of it, which is even worse in some respects. How long can you pay attention? What do you feel like when someone’s out there talking and you got a bit of it, but you missed the details and all the other kids are kind of flipping to the correct page and getting started and you don’t know how to get started.

What happens to you when you can’t trust your own brain to take care of this for you? What are the kind of defense mechanisms you might develop? Attention problems, impulsivity, acting out, being the class clown – anything is better in many ways for your self esteem and sense of well being than believing that you can’t trust yourself to even process the information in your world. That’s very scary. So, I think that you develop these other mechanisms.

Shame Avoidance:

David Boulton: This is shame avoidance.

Dr. Paula Tallal: It’s a shame avoidance to oneself. It’s not only the external. I think we often think about the child who is developing behaviors to cope in terms of how other people are going to treat them, that’s certainly important. But I think ultimately it comes down to how you feel about yourself and can you trust yourself to get through the world, to keep you safe, to perform well, to make you feel good about yourself. And a lot of that has to do with automatic processing and automatic control of the information that’s coming into your world. I think a lot of children who are struggling with that will develop a lot of compensatory behaviors to try to gather a sense of being in control. Even if it makes them get in trouble, at least they were in control of getting in trouble. Whereas they cannot be in control of failure if they really can’t do it, and that feels a lot worse. That’s just a theory, it’s not scientific.

David Boulton: This is where affect science comes in. It’s an important connection.

Dr. Paula Tallal: Yeah. That’s why I’m saying some of this, and though this is not my scientific theory, it is my experience with doing psychotherapy with families and children. One of the things that has been so interesting is family therapy. When a family comes in primarily because they have a child who has a learning disability and you get started talking about the child, what you often find is that there’s become so much focus on the learning disability for this child that the rest of the family, even the brothers and sisters, lose track of the other qualities of the child. If you go around the room and you ask everyone to say something positive or good about what each person is good at, for the other kids in the room the family will say ‘He does this, he does that well’ or whatever. But when you get to the child with the learning disability they’re always trying to figure out something academically that that child is good at. ‘Well, that’s kind of tough, yeah, he can kind of do math pretty well.’ So this kid has just become the academic part of himself. So, you say well isn’t there anything else this child does well? ‘Oh I don’t know, he’s not really good at spelling and he’s terrible at reading.’ Well, what about other things this child may do well? ‘Well, kind of okay at geography…’ and you finally say…

David Boulton: This is the parent version of teach to the test though, isn’t it?

Dr. Paula Tallal: Yes, exactly. And then you finally say well does he have any friends? ‘Oh yeah, people just really like him, you know, he’s so nice and the neighbors say…’ Well, doesn’t that count? ‘Oh I thought you just meant about academics.’ They have gotten so focused because the school has gotten so focused and everything has become about this one area the kid is not good at, at the exclusion of all these other abilities this child does have. Many times the therapy is about rebalancing for the parents.

One of the things I often tell kids in private is, ‘You know what? When you grow up no one is ever going to test your reading again.’ And sometimes getting the parents involved in doing the testing has been really interesting because we do family genetic studies and test all the members in the family and just to see them remember what it was like to be tested. A lot of times they’ve forgotten how uncomfortable it is.

Most of Our Children Are Learning to Feel Ashamed of Their Minds:

David Boulton: Speaking of tests, the 2002 National Reading Report Card effectively says that sixty percent of our twelfth graders are below the level of proficiency, sixty-nine percent of fourth graders. This is not a minor part, this is the majority. We could say most of our children are less than proficient in reading even after twelve years of our attempts to teach them, on the one hand. On the other hand, we’ve got NICHD saying that the first consequence is damaged self-esteem, that children become ashamed of themselves in this whole reading experience. So, it seems to translate to me that our education system and our approach to reading, in general, is teaching most of our children to be ashamed of their minds.

Dr. Paula Tallal: Yes. Well the statistics on the negative sequelae of failing to read are horrifying. And we continue to think about this as a small problem. It’s not life threatening, that’s for sure, but it can be life destroying for a lot of individuals and we tend to forget that.

It’s remarkable that there’s no insurance coverage for most of the families who have children with these problems. There are very few physicians who are more than just aware of the problem. There are very few individuals who specialize in this problem. Many parents have said to me, ‘If I had a child with any other problem I would have some high level professional with a medical degree to take this child to, to deal with the problem. But if my child has an educational problem I’m on my own with the teacher’ – who are very well meaning, but many times don’t really know what to do with the children who have a lot of difficulty in this area.

At the same time, we know remarkably well that if you know a child’s reading ability by the third grade you generally have a very good ability to predict whether they’re going to graduate from high school or not and what their abilities are going to be and what their future is going to be. That’s the reason we hear all children will read by the third grade, we know that’s highly predictive.

What are the rest of the statistics? I don’t know if you want to hear the horror statistics, but it’s quite remarkable. I had the opportunity to give a report to the Congressional Bio-Medical Research Caucus so I went into looking at the statistics. It’s something like every public major concern has a much higher incidence of reading problems attached to it from juvenile delinquency to teen pregnancy to failure to graduate from high school to drug problems. You take anything that we say is a major concern and there is a higher than expected incidence, by far, of individuals who have struggled with reading or have a frank learning disability.

It costs twice as much in real dollars to educate a child in special education than in regular education and yet through the history of maintaining statistics and all the additional money that has gone in we have seen a flat line in terms of the actual effectiveness of our current ability to remediate these issues. And yet we know that all these other problems are on the rise as we have more and more children who are having difficulty in learning to read. We do have a national crisis, well probably an international crisis, but we do have a major crisis in the United States.

Reasons For Optimism:

Dr. Paula Tallal: As I say, on the one hand that’s extremely disturbing and extremely pessimistic in terms of the statistics. But it’s also one of the most optimistic areas that we have because we have new technologies and new research. Tremendous progress is being made and there’s a real sense that this is one of those problems that we probably will be able to fix. We will at least take a big bite out of the problem through our advances in understanding what the underlying etiologies are, how these problems come to be from the neurobiological point of view, from the sociological point of view, and from the psychological effects that they have on children. We are seeing tremendous improvement with new approaches and new interventions. So I think it is one of the more hopeful areas of research these days and for society as well.

What’s Discouraging:

Dr. Paula Tallal: Can we teach all children to read by third grade? That’s going to be a matter of enhanced translation of what we’ve learned from research and the output of that into the institutions that we have that train children, the public schools. One of the most discouraging areas is how long it takes to translate that research into the classroom. But, as I say, the hopeful area is that it is really beginning to happen and with tremendous improvements being seen.

Message to Teachers:

David Boulton: As a final wrap up here, imagine that you could talk to teachers, parents, and people who are actually working with children who are witnessing this struggle that children are going through. Is there anything you want to say to them that might be helpful in reframing or otherwise directing their efforts? Is there any one thing you’d like to tell teachers?

Dr. Paula Tallal: The one thing I’d like to tell teachers is I think there’s a tremendous amount of information on how the brain learns and particularly how the brain learns language and reading that can be obtained on the web these days. There’s some wonderful websites that are done just for that and are just for teachers like Brain Connection. I think teachers very much want to increase their understanding about neuroscience and how the brain learns. A lot of that information is available through conferences and websites.

The other thing I would say to teachers is to trust your instincts – on the whole they’re excellent. Seek out those new technologies that can be real aids to you in helping the children who are really struggling to crack the code. If there was something that you could have done to help many of these children, then I know it would have been done. It’s not for lack of trying. That leads me to believe that there are certain things that need the aid of technology to really facilitate in partnership with good teaching to help many of these children who are struggling.

David Boulton: Thank you.

Dr. Paula Tallal: You’re welcome.